Querying GitHub Archive with Snowflake: The Essentials

These are the essential tips for querying GitHub’s ongoing history with Snowflake. Use these tips to explore and uncover insights within this 17 terabyte dataset, while avoiding common mistakes. GH Archive is now available free in the Snowflake Marketplace — so let’s get started.

Before joining Snowflake, I authored many posts on ways to analyze Github with BigQuery. Now that the GH Archive dataset is available in Snowflake (thanks to Cybersyn), I’m happy to share with you my top tips to enable your discovery journey.

Step 0: Import GitHub Archive shared by Cybersyn into your Snowflake account

To make a public dataset available in your Snowflake account, you need an ACCOUNTADMIN role to manually add it:

- Find the dataset at “app.snowflake.com/[…]/cybersyn-inc-cybersyn-github-archive”, and click on “Get”.

- Import it with an easy to remember database name. The automatic suggestion is

Cybersyn_GitHub_Archive, but I preferGHARCHIVE. - Make sure to make this imported share queryable with a role with less privileges than

ACCOUNTADMIN— for example,PUBLIC.

Step 1: Control your costs

Now we are ready to query — and since this is a 17 Terabyte dataset, it’s important to make sure that our queries can run efficiently and with no surprises.

Let’s review some of the best practices:

Resource monitors

A good ACCOUNTADMIN will have already set-up resource monitors appropriate for your organization use cases:

Query timeouts

Snowflake default query timeout is 48 hours, but that’s excessive for an interactive exploratory session. We can set up a limit for our session:

alter session set statement_timeout_in_seconds = 30;To make sure that this limit persists across sessions, you can also modify it for your own username:

alter user felipe set statement_timeout_in_seconds = 30;Right-size warehouse

In my own Snowflake account, I usually play within a Small Virtual Warehouse. If you are sharing an account with more teammates, it might make sense to use a larger warehouse for parallelism. In general avoid using a very large warehouse just for yourself — unless you are running a large extract job.

use warehouse s;Or do everything in a X-Small warehouse, and learn to trust the Snowflake Query Acceleration Service for large extract jobs. (We can explore this further in an upcoming post).

Step 2: Efficient queries

Since this is a 17 TB dataset, make sure your queries are efficient — and do your best to avoid full table scans.

Don’t select without a LIMIT

One of the following select * errs with a timeout, the other one takes only a second:

select *

from gharchive.cybersyn.gh_events

-- Statement reached its statement or warehouse timeout of 30 second(s) and was canceled.

;

select *

from gharchive.cybersyn.gh_events

limit 100

-- 1.2s

;Prune in time

Instead of going throughout random sections of the gigantic table, prune your queries to select days:

select count(distinct actor_id)

from gharchive.cybersyn.gh_events

where date(created_at_timestamp) between '2023-08-01' and '2023-08-03'

-- < 1s, when hot

-- 1,269,789 distinct users

;Use the right timestamp column

Always prefer using created_at_timestamp, instead of the column created_at. The latter one is a string (from the original dataset), while created_at_timestamp is a proper timestamp (as transformed by Cybersyn):

select count(distinct actor_id)

from gharchive.cybersyn.gh_events

where date(created_at_timestamp) between '2023-08-01' and '2023-08-03'

-- < 1s, when hot

-- (prefer this)

;

select count(distinct actor_id)

from gharchive.cybersyn.gh_events

where date(created_at) between '2023-08-01' and '2023-08-03'

-- Error: Date '2012/03/10 22:55:00 -0800' is not recognized

;

select count(distinct actor_id)

from gharchive.cybersyn.gh_events

where try_cast(created_at as date) between '2023-08-01' and '2023-08-03'

-- Error: Statement reached its statement or warehouse timeout of 30 second(s) and was canceled.

;

select count(distinct actor_id)

from gharchive.cybersyn.gh_events

where left(created_at, 10) between '2023-08-01' and '2023-08-03'

-- < 1s, when hot

-- (a string will prune, but it's harder to treat it as a timestampt)

;Extract project history to a new table

To analyze the whole history of a collection of projects (or a single one), extract all the related rows to a new table. From then on, just query that smaller table.

For example, to extract all rows related to the Apache Iceberg project throughout the history of GitHub, do something like:

create or replace table gharchive_iceberg

as

select *

from gharchive.cybersyn.gh_events

where repo_id = 158256479

order by created_at_timestamp

-- 21m 44s small

-- 46s 2xlarge

-- 67s xsmall with query acceleration serviceAs you can see above the query timeout limit of 30s will be a problem for this extraction — but once you’ve done it, all the queries on the smaller will run way faster.

To extract all events for Apache Hudi, Apache Iceberg, and Delta since 2018:

create table gharchive_iceberg_delta_hudi

as

select *

from gharchive.cybersyn.gh_events

where created_at_timestamp > '2018-01-01'

and (

repo_id in (182849188, 158256479, 76474200)

or org_id = 49767398

)

order by repo_id, created_at_timestamp

-- 11m32s XL

;Notice the order by is useful when creating a new table to keep the rows sorted by date, which helps with further pruning.

Step 3: Correct queries

When analyzing GH Archive you should double check your results, and make sure that they reflect reality. Find some best practices here:

Use repo_id instead of repo_name

Using repo_name is more convenient than the numeric repo_id, but projects change names through their history.

For example, Hudi used to be an Uber project, then an Apache incubated project, and now a proper Apache project:

select repo_id, repo_name, min(created_at)

from gharchive_iceberg_delta_hudi

where repo_id in (158256479, 76474200, 182849188)

group by 1, 2

order by 1, 3;

Get the latest repo_name with max_by()

To get the latest name of a repo, use the new Snowflake SQL function max_by(). In the following example max_by(repo_name, created_at_timestamp) finds the max timestamp for each repo_id, and outputs the repo_name for that row.

select repo_id, max_by(repo_name, created_at_timestamp) repo_name

from gharchive.cybersyn.gh_events

where repo_id in (158256479, 76474200, 182849188)

and created_at_timestamp > current_date - 5

group by 1;

Note that the above query runs fast even on the full 17TB table — as we are limiting the scan to only the last 5 days.

Be careful when counting stars — dedup

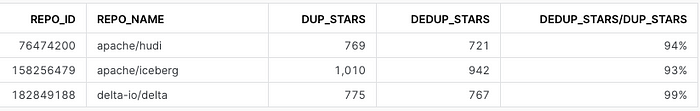

Most projects have duplicated stars — as the same person can register the same “star” event many times. You should de-duplicate these events using distinct actor_id:

select repo_id, max_by(repo_name, created_at) repo_name

, count(*) dup_stars

, count(distinct actor_id) dedup_stars

, dedup_stars/dup_stars

from gharchive_iceberg_delta_hudi

where repo_id in (158256479, 76474200, 182849188)

and type = 'WatchEvent'

and created_at_timestamp > '2023-01-01'

group by 1

order by 1, 3;

You can see that Iceberg has the largest % of duplicated stars during 2023 — but even after de-duplicating it’s the project with the most stars during this time period.

For the same reason avoid using the summary table GH_STARS that Cybersyn provides. It’s useful and fast — but it will produce wrong results when adding numbers.

How to make the table GH_STARS more useful

Cybersyn created the table GH_STARS for users to save time while counting stars, but you’ll get wrong results if you just add the daily counts naively (as seen above).

This is what the table contains today:

GH_STARSThe problem is that each day might count the same username adding the same star to the same project more than once. To get the correct results, we need to de-duplicate those. That can take a lot of resources, but there’s a solution:

- Cybersyn could add a column with an HLL sketch (a compact probabilistic representation) of the ids of the users that starred that project that day. Then you could combine those sketches to get a better approximate count. (We can review this deeper in a future post).

Compared with BigQuery

Since you can find GH Archive on Snowflake and BigQuery, it can be interesting to double-check the results of the same query on both repos.

That’s how I discovered that BigQuery has many issues with duplicated and missing rows — probably due to their ingestion pipeline. For example, you can check that BigQuery is missing data for 2022-12-20 02:0000, and also has many duplicated rows:

select id, count(*) c

from gharchive_iceberg

group by id

having c > 1

limit 10;

-- 3 duplicated rows in Snowflake

-- 4,456 duplicated rows in BigQuery

As Cybersyn runs a different pipeline to load the files published by GH Archive, Snowflake’s copy of the data appears more reliable than the one hosted in BigQuery.

Next steps

- Keep coming back here, as I’ll add links to interesting posts using this data from teammates and the community.

- Run your own analysis, and share your results with us.

Want more?

Try this out with a Snowflake free trial account — you only need an email address to get started.

I’m Felipe Hoffa, Data Cloud Advocate for Snowflake. Thanks for joining me on this adventure. You can follow me on Twitter and LinkedIn. And subscribe to reddit.com/r/snowflake for the most interesting Snowflake news.